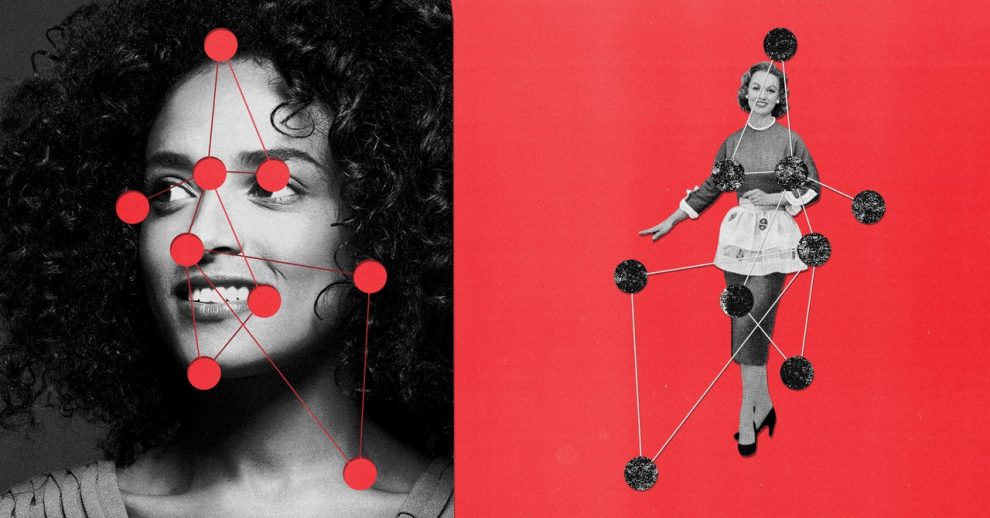

Men often judge women by their appearance. Turns out, computers do too.

When US and European researchers fed pictures of congressmembers to Google’s cloud image recognition service, the service applied three times as many annotations related to physical appearance to photos of women as it did to photos of men. The top labels applied to men were “official” and “businessperson”; for women they were “smile” and “chin.”

“It results in women receiving a lower status stereotype: That women are there to look pretty and men are business leaders,” says Carsten Schwemmer, a postdoctoral researcher at GESIS Leibniz Institute for the Social Sciences in Köln, Germany. He worked on the study, published last week, with researchers from New York University, American University, University College Dublin, University of Michigan, and nonprofit California YIMBY.

The researchers administered their machine vision test to Google’s artificial intelligence image service and those of rivals Amazon and Microsoft. Crowdworkers were paid to review the annotations those services applied to official photos of lawmakers, and images those lawmakers tweeted.

Google’s AI image recognition service tended to see men like senator Steve Daines as businesspeople, but tagged women lawmakers like Lucille Roybal-Allard with terms related to their appearance.

The AI services generally saw things human reviewers could also see in the photos. But they tended to notice different things about women and men, with women much more likely to be characterized by their appearance. Women lawmakers were often tagged with “girl” and “beauty.” The services also tended not to see women at all, failing to detect them more often than they failed to detect men.

The study adds to evidence that algorithms do not see the world with mathematical detachment but instead tend to replicate or even amplify historical cultural biases. It was inspired in part by a 2018 project called Gender Shades that showed that Microsoft’s and IBM’s AI cloud services were very accurate at identifying the gender of white men, but very inaccurate at identifying the gender of Black women.

The new study was published last week, but the researchers had gathered data from the AI services in 2018. Experiments by WIRED using the official photos of 10 men and 10 women from the California state Senate suggest the study’s findings still hold.

Amazon’s image processing service Rekognition tagged images of some women California state senators, including Ling Ling Chang, a Republican, as “girl” or “kid” but didn’t apply similar labels to men lawmakers.

Amazon and Microsoft’s services appeared to show less obvious bias, although Amazon reported being more than 99 percent sure that two of the 10 women senators were either a “girl” or “kid.” It didn’t suggest any of the 10 men were minors. Microsoft’s service identified the gender of all the men, but only eight of the women, calling one a man and not tagging a gender for another.

Google switched off its AI vision service’s gender detection earlier this year, saying that gender cannot be inferred from a person’s appearance. Tracy Frey, managing director of responsible AI at Google’s cloud division, says the company continues to work on reducing bias and welcomes outside input. “We always strive to be better and continue to collaborate with outside stakeholders—like academic researchers—to further our work in this space,” she says. Amazon and Microsoft declined to comment; both companies’ services recognize gender only as binary.

The US-European study was inspired in part by what happened when the researchers fed Google’s vision service a striking, award-winning image from Texas showing a Honduran toddler in tears as a US Border Patrol officer detained her mother. Google’s AI suggested labels including “fun,” with a score of 77 percent, higher than the 52 percent score it assigned the label “child.” WIRED got the same suggestion after uploading the image to Google’s service Wednesday.

Schwemmer and his colleagues began playing with Google’s service in hopes it could help them measure patterns in how people use images to talk about politics online. What he subsequently helped uncover about gender bias in the image services has convinced him the technology isn’t ready to be used by researchers that way, and that companies using such services could suffer unsavory consequences. “You could get a completely false image of reality,” he says. A company that used a skewed AI service to organize a large photo collection might inadvertently end up obscuring women businesspeople, indexing them instead by their smiles.

When this image won World Press Photo of the Year in 2019, one judge remarked that it showed “violence that is psychological.” Google’s image algorithms detected “fun.”

