Over the past decade, we have witnessed the size of machine learning algorithms grow exponentially due to improvements in processor speeds and the advent of big data. Initially, models were small enough to run on local machines using one or more cores within the central processing unit (CPU).

Shortly after, computation using graphics processing units (GPUs) became necessary to handle larger datasets, and GPUs became more readily available due to the introduction of cloud-based services such as SaaS platforms (e.g., Google Colaboratory) and IaaS offerings (e.g., Amazon EC2 instances). At this time, algorithms could still be run on single machines.

More recently, we have seen the development of specialized application-specific integrated circuits (ASICs) such as tensor processing units (TPUs), which can pack the power of ~8 GPUs. These devices have been augmented with the ability to distribute learning across multiple systems in an attempt to grow larger and larger models.

This came to a head recently with the release of the GPT-3 model (released in May 2020), boasting a network architecture containing a staggering 175 billion parameters, more than double the number of neurons present in the human brain (~85 billion). This is roughly 10x the number of parameters in the next-largest neural network created up to that point, Turing-NLG (released in February 2020, containing ~17.5 billion parameters). Some estimates claim that the model cost around $10 million to train and used approximately 3 GWh of electricity (approximately the output of three nuclear power plants for an hour).

While the achievements of GPT-3 and Turing-NLG are laudable, they have naturally led some in the industry to criticize the increasingly large carbon footprint of the AI industry. However, they have also helped to stimulate interest within the AI community in more energy-efficient computing. Such ideas, like more efficient algorithms, data representations, and computation, have been the focus of a seemingly unrelated field for several years: tiny machine learning.

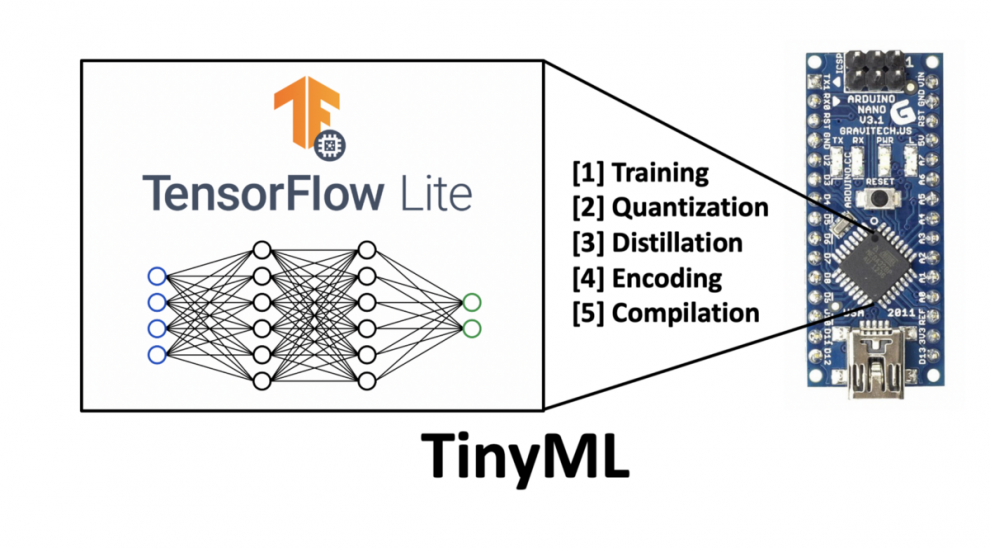

Tiny machine learning (tinyML) is the intersection of machine learning and embedded internet of things (IoT) devices. The field is an emerging engineering discipline that has the potential to revolutionize many industries.

The main industry beneficiaries of tinyML are in edge computing and energy-efficient computing. TinyML emerged from the concept of the internet of things (IoT). The traditional idea of IoT was that data would be sent from a local device to the cloud for processing. This model raised several concerns: privacy, latency, storage, and energy efficiency, to name a few.

Energy Efficiency. Transmitting data (via wires or wirelessly) is very energy-intensive, around an order of magnitude more energy-intensive than onboard computations (specifically, multiply-accumulate units). IoT systems that perform their own data processing and transmit only what is needed are therefore generally the more energy-efficient option. AI pioneers have discussed this idea of “data-centric” computing (as opposed to the cloud model’s “compute-centric”) for some time, and we are now beginning to see it play out.
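The trade-off can be made concrete with a back-of-the-envelope sketch. The numbers below are illustrative assumptions, not measurements: the only figure taken from the text is the rough 10x gap between transmission and a multiply-accumulate (MAC) operation, here applied per byte sent. The sample size, model cost, and function names are hypothetical.

```python
# Relative energy costs in arbitrary units. These are illustrative assumptions:
# the text only says transmission is around an order of magnitude more costly.
MAC_COST = 1.0            # one multiply-accumulate operation
TX_COST_PER_BYTE = 10.0   # transmitting one byte (assumed 10x a MAC)


def cloud_energy(raw_bytes: int) -> float:
    """Energy to ship the raw sample off-device for remote processing."""
    return raw_bytes * TX_COST_PER_BYTE


def edge_energy(macs: int, result_bytes: int) -> float:
    """Energy to run inference locally and transmit only the result."""
    return macs * MAC_COST + result_bytes * TX_COST_PER_BYTE


raw_bytes = 1_000    # hypothetical sensor sample size
macs = 2_000         # hypothetical model cost per inference
result_bytes = 1     # e.g. a single class label

print(cloud_energy(raw_bytes))          # 10000.0
print(edge_energy(macs, result_bytes))  # 2010.0
```

Under these assumptions, local inference stops paying off once the model needs roughly ten MACs per raw byte it would otherwise transmit, which is one reason small, efficient models matter so much on the device.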

Privacy. Transmitting data opens the potential for privacy violations. Such data could be intercepted by a malicious actor, and it becomes inherently less secure when warehoused in a single location (such as the cloud). Keeping data primarily on the device and minimizing communications improves security and privacy.

Storage. For many IoT devices, much of the data they collect is of no merit. Imagine a security camera recording the entrance to a building 24 hours a day. For a large portion of the day, the camera footage is of no utility, because nothing is happening. A more intelligent system that only activates when necessary needs less storage capacity and has less data to transmit to the cloud.
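As a minimal sketch of the "only activate when necessary" idea, the snippet below keeps a frame only when it differs noticeably from the previous one. Simple frame differencing stands in here for whatever on-device detector a real system would use; the `Frame` type, the function names, and the threshold are all hypothetical.

```python
from typing import Iterable, List, Optional, Sequence

Frame = Sequence[int]  # hypothetical flat grayscale frame, pixel values 0-255


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel difference between two equally sized frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def frames_to_keep(frames: Iterable[Frame], threshold: float = 10.0) -> List[int]:
    """Return indices of frames that differ noticeably from the previous frame."""
    kept: List[int] = []
    previous: Optional[Frame] = None
    for index, frame in enumerate(frames):
        if previous is not None and mean_abs_diff(frame, previous) > threshold:
            kept.append(index)
        previous = frame
    return kept


# Toy stream: a static scene, a bright object appears in frame 3 and leaves in frame 5.
static = [20] * 16
moving = [20] * 8 + [200] * 8
stream = [static, static, static, moving, moving, static, static]

print(frames_to_keep(stream))  # [3, 5]
```

Only two of the seven frames are flagged, so only those (and perhaps a short window around them) would need to be stored or sent to the cloud.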

Latency. Standard IoT devices, such as Amazon Alexa, transmit data to the cloud for processing and then return a response based on the algorithm’s output. In this sense, the device is just a convenient gateway to a cloud model, like a carrier pigeon between yourself and Amazon’s servers. The device is pretty dumb and fully dependent on the speed of the internet to produce a result. If you have slow internet, Amazon Alexa will also become slow. For an intelligent IoT device with onboard automatic speech recognition, the latency is reduced because there is reduced (if not no) dependence on external communications.

These issues led to the development of edge computing, the idea of performing processing activities onboard edge devices (devices at the “edge” of the cloud). These devices are highly resource-constrained in terms of memory, computation, and power, which has driven the development of more efficient algorithms, data structures, and computational methods.

Such improvements are also applicable to larger models, which may lead to efficiency increases in machine learning models by orders of magnitude with little or no impact on model accuracy. As an example, the Bonsai algorithm developed by Microsoft can be as small as 2 KB but can have even better performance than a typical 40 MB kNN algorithm, or a 4 MB neural network. This result may not sound important, but the same accuracy on a model thousands of times smaller is quite impressive. A model this small can be run on an Arduino Uno, which has 2 KB RAM available. In short, you can now build such a machine learning model on a $5 microcontroller.
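The size comparison can be checked with quick arithmetic. The sketch below uses only the model sizes quoted above and assumes binary units (1 KB = 1024 bytes); the variable and function names are illustrative.

```python
KB = 1024
MB = 1024 * KB

# Model sizes quoted in the text.
bonsai_bytes = 2 * KB
knn_bytes = 40 * MB
neural_net_bytes = 4 * MB


def size_ratio(large: int, small: int) -> float:
    """How many times larger `large` is than `small`."""
    return large / small


print(size_ratio(knn_bytes, bonsai_bytes))         # 20480.0
print(size_ratio(neural_net_bytes, bonsai_bytes))  # 2048.0
```

So the 2 KB model is about 2,000 times smaller than the 4 MB neural network and about 20,000 times smaller than the 40 MB kNN model.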

We are at an interesting crossroads where machine learning is bifurcating between two computing paradigms: compute-centric computing and data-centric computing. In the compute-centric paradigm, data is stockpiled and analyzed by instances in data centers, while in the data-centric paradigm, the processing is done locally at the origin of the data. Although we appear to be quickly moving towards a ceiling in the compute-centric paradigm, work in the data-centric paradigm has only just begun.

IoT devices and embedded machine learning models are becoming increasingly ubiquitous in the modern world (more than 20 billion active devices were predicted by the end of 2020). Many of these you may not even have noticed: smart doorbells, smart thermostats, or a smartphone that “wakes up” when you say a couple of words or even just pick it up. The remainder of this article will look more closely at how tinyML works, and at current and future applications.
